The global pandemic and investments in mRNA COVID vaccines have accelerated worldwide interest in the field of synthetic biology--a field that unifies chemistry, biology, computer science, and engineering for the purpose of writing better biological code. In this podcast, "Genesis Machine" co-author Amy Webb and Senior Fellows Nick Gvosdev and Tatiana Serafin explore how these developments are leading to a new industrial revolution.

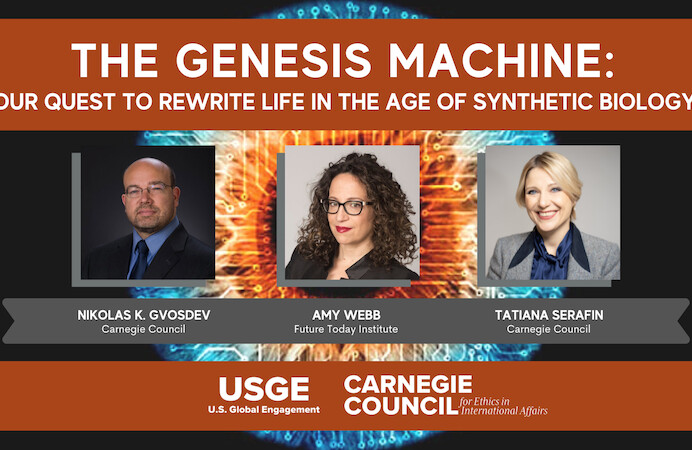

NIKOLAS GVOSDEV: Good evening, everyone, and welcome to this edition of The Doorstep book talk. I am your co-host, senior fellow here at the Carnegie Council Nick Gvosdev.

TATIANA SERAFIN: And I'm Tatiana Serafin, also a senior fellow at Carnegie Council.

So very, very, very excited to welcome Amy Webb, co-author of The Genesis Machine: Our Quest to Rewrite Life in the Age of Synthetic Biology. If you have seen The Doorstep book talk before, you know that I love reading, and I love this book so much. She will be speaking with us today about this wonderful book.

I am going to start the intro by saying, Amy, I saw you speak a few years ago at an Online News Association (ONA) conference, and I was so inspired by your talk and the Future Today Institute.

A little bit of background on Amy today. She is the CEO of the Future Today Institute, which is in the business of collecting data, modeling data, finding patterns, and describing plausible futures, which is what this book is also about, and we will talk a little bit about how you put that also in the book. You are a professor of strategic foresight at the New York University Stern School of Business.

Although I want to talk about your work as a futurist, we will try to stick to the book, and we invite the audience to ask questions as we go along. You co-authored the book with Andrew Hessel, so I want to give him a shout-out too, a geneticist and an entrepreneur. The two of you together have created such an inspiring read about an industry that is set to really explode. I know in the book you talk about it being at its very beginning, sort of like before we had the telephone; but the pace of change as we know in tech is super-rapid.

What we are talking about today for our audience is synthetic biology (SynBio) and how it is going to change the way we live, the way we parent, the way we eat, and the way we identify. There are so many applications of this technology that we are at the cusp of.

But we need to do a lot of work to understand it. Amy, I love the fact that you talk about how the book is your foray into helping start the conversation among regulators, entrepreneurs, money people—all the billionaires who are in this space—but also the regular day-to-day people. What we try to do here at The Doorstep is to bring issues to people that they should be thinking about and maybe don't understand or are misinformed about.

You talk a lot about misinformation, and we will get into that in our talk tonight too. As a journalist I really am anti-misinformation and I am pro-news literacy. It is so interesting because you talk about bio literacy. I want to talk about that with you too tonight. There is just so much.

We will get started, and I would like to start off because I do think some of our audience might not be familiar with what is this new growing field of synthetic biology. Maybe you could just give us a little bit of a lay of the land to start us off, and then we will delve into your book a bit more.

AMY WEBB: Sure. First of all, thank you so much for having me. It is great to hear that you were at ONA, whenever that was. I used to be on the board of directors, so it is an organization near and dear to my heart.

And thanks, everybody, for being here to learn a little bit more about this book that Andrew and I wrote about a somewhat esoteric emerging area of science and technology, but a field that you will be hearing much more about pretty soon over the coming years.

The backdrop to this book is that Andrew is a scientist and I am a person who works in strategic foresight. We both think a lot about the future. What I do for a living is model signals, forces, and trends and try to understand their next-order impacts and the effects that those would have on government, business, individual companies, things like that.

There really is one area of change that I think is so profound and has potentially such a dramatic impact across so many industries that I decided to write a book about it, and then about midway through I asked Andrew if he would like to join.

The book is about something called synthetic biology. It is totally fine if you haven't heard this phrase before. Synthetic biology is the name for an emerging area of science that involves redesigning organisms for useful purposes by engineering them to have new abilities or adapted or edited abilities. That can range from something as small as a molecule all the way on up to something as big as an elephant.

Synthetic biology is interdisciplinary. This combines engineering, design, and computer science, all with the intent to tinker with biology in new ways.

What is interesting about this is if you have used a computer before, this is analogous to the permissions that we have with computer code, except we are talking about biological code. We have "read access" to documents and we have "write access" to documents. Similarly in biology, when Watson and Crick built on—without giving credit to—the research of Rosalind Franklin, that gave us the insight that there is a double helix, and that set us on a path to being able to read the genome, genetic sequences.

More recently there is something called Clustered Regularly Interspaced Short Palindromic Repeats (CRISPR), which is a technique that allows us to edit. What synthetic biology does is give us write permissions. Using a computer, scientists are able to edit sequences, put them in new combinations, test out new things, and effectively send that computer code to something like a three-dimensional printer, which prints out, as crazy as this sounds, new living organisms.

While we probably immediately think that this means creating "designer babies"—and genetic engineering on humans is definitely out there but on a slightly longer horizon—more importantly what this means is optionality. It gives us different ways to approach agriculture, to approach drug discovery and therapeutics, and even to help mitigate some of the challenges of climate change.

There are enormous risks, but also lots of opportunities, and the purpose of the book is to give you the knowledge, the background, and the insights now so that when we start to confront these risks and opportunities we can have a reasonable public discussion and make good decisions that are in everybody's best interests.

TATIANA SERAFIN: I think that you really lay that out. For me it was your whole discussion on the analogy to artificial intelligence (AI) and how that all came to be. Now we are reacting to it versus getting ahead of it.

Maybe you can go into that analogy and some of the things that you think we should do to mitigate the risks. In the book you have nine risks, but it is so optimistic. I want to say that you pose it in a way that doesn't get people scared of this idea that you can edit a gene and create something new.

I am going to have to read this because I love what you said: "We have had creator control for a long time." This isn't something new that we are doing to the environment. We have been playing the role of the creator.

"This is the world we created not through intelligent planning and intentional design but through mindless evolution made through centuries of choices and actions which continue to play out in unpredictable ways"—as related to climate change, for example, or our food chain system—and now we can step back and say: "Wait a minute. For centuries we haven't thought things through, we have been reactive, but now let's be proactive."

AMY WEBB: There is a lot in that question, so let me see if I can unpack some of it.

You mentioned artificial intelligence. What is very interesting is that synthetic biology is kind of an umbrella term that encompasses different technologies and the greater bioeconomy. There is an interesting overlap between artificial intelligence and this field, and that is because computers are involved, as is automation and things like making predictions and solving problems. So there is computation involved.

The more powerful our AI systems become, by default the more powerful our biological tools become. A lot of people don't know this, but there is an overlap between the technology companies building the forefront of AI, the investors who are funding AI, and the technology companies and investors who are now in synthetic biology. So there are a lot of the familiar "usual suspects."

Even companies like Nvidia, which works in computer processing, image rendering, image generation, and computer vision, are also working in SynBio, as is Salesforce. Many people recognize Salesforce as the global juggernaut in keeping track of who's who in what is called customer relationship management (CRM), so knowing people. Well, it's interesting. They also have a segment of that organization dedicated to this type of research.

What does that mean for the future of how we think about evolution? Again, technology is impacting and has some influence on human evolution. It certainly changed society, even down to things like eyesight. I was looking at some data a while ago. People are significantly more myopic—kind of a double meaning there—we are definitely more myopic, but biologically we are also more myopic, which is another way of saying nearsighted, than ever before. The number of people who need corrective lenses is accelerating at a faster pace than ever before in human history, at least as long as we have been aware that people need corrective lenses.

Why? Well, pre-COVID-19 we were already spending a lot of time on screens and our eyes were initially evolved to look for predators, so things that were far away that might attack us and eat us. Our eyes have not evolved as fast as the technology around us, and as a result we cannot accommodate looking near and far over and over again at the same time in lights. What does that mean? Everybody has to wear more glasses. So technology has already started to influence our biology, and that is one of many examples.

But it is interesting for us to think about using technology to influence biology itself. One of the arguments we make in the book is that oftentimes when we talk about editing or creating new living structures, new life forms, in whatever form they may take, there is a religious and spiritual element to this and it challenges our current mental models.

The argument that we are making is that through our decisions—whether that is to stare at a computer or a phone all day long or to make decisions about how we are planting things or how we are emitting CO2—we are very much playing the role of creator; we just don't think about it that way. What we are advocating is that as we pursue this new avenue of technology and science we just get smarter about that creator position and the outcomes that we want to see.

NIKOLAS GVOSDEV: Can I touch on that? You mentioned a number of areas where synthetic biology really backs into core doorstep issues, particularly for politicians around the world—food supply, energy; dealing with pandemics and the extent to which the rapid ability to develop vaccines for COVID-19 is tied to this new technology that wouldn't have been available 20 years ago when we first had the first wave of Severe Acute Respiratory Syndrome (SARS) and other similar coronaviruses.

But then the question, as you said, is: Who is actually in charge or who is actually doing this? It really reminded me of the Doorstep podcast that we did with Christian Davenport about space, that governments are really playing more of a backseat. There are investors, there are people in the tech field, and there are visionaries, but it does seem that a lot of this is occurring not as something where societies have made choices but that there are people making choices for societies who then, as you said, either decide that societies will have to adapt to these or that may produce a reaction.

Is there a sense as you have been covering this of the extent to which for the people and the companies that are most involved in synthetic biology is it just simply a matter of "We're trying to solve certain problems," "We see a profit stream;" or are there people saying, "This really allows us to sort of set a direction for human society that isn't being set by governments?" Again I am thinking of the Peter Thiels or the Elon Musks who really say we want to take human society out into space, out to sea, or elsewhere in different ways. But really who makes the call?

You did raise the question too of philosophical and religious movements as well. Is this a way that we are seeing power shift away from traditional forms to those who will be able to have these tools at their disposal?

AMY WEBB: I think the challenge that we continually face is that our form of government asks people to make choices about who they want in various positions, and that is a good thing. It also means that it is very difficult to set long-term strategy, and the bottom line is that the more vitriolic our politics gets in this country the further away we get from long-term visioning. We are at a moment in time where without that long-term vantage point and the wherewithal to stick to it, we become globally less competitive. It also creates a strategic vulnerability when it comes to diplomatic, militaristic, and other avenues.

The solution to that is either erecting a national office of strategic foresight—which I have suggested and written a paper on, and someday maybe our government will adopt it—or to figure out a way to do some of this long-term planning. Every big firm is looking at this space. Many are already invested in this space. We are sort of at the building-the-foundations stage versus the end-consumer product stage.

We have a very haphazard approach in the United States. Just like our government really wasn't thinking through the long-term trajectory of artificial intelligence until very recently, the same is very true when it comes to this space.

However, that is not the case in China, where both AI and SynBio and also biotech-related industries are part of their fourteenth Five-Year Plan. It is part of their strategic roadmap, and part of their sovereign wealth fund is dedicated to this space. We see similarities in places like Singapore, which has already pushed through regulatory approval to allow synthetic meat—cellular-based meat products versus plant-based meat products—to go on sale. We are seeing movement outside of the United States. This is bad for a couple of reasons.

One is this is an area that involves life, so it involves cells, and to some degree stem cells, so when we have administrative changes and we have people who are maybe leaning more to the right in power, that completely upends policy. It also changes funding. It completely collapses the existing roadmap for how this stuff develops. This is coming at a really challenging time because there are things that are happening. We also have some needs, and we are also just falling behind everybody else.

There is another piece of this, which is this technology does potentially give us optionality in agriculture. Agriculture, depending on who you talk to, hasn't changed much in between 14,000 and 10,000 years; there really haven't been any fundamental changes. This technology gives us the ability to influence what that future looks like, and we are going to need to do that because there is no way in hell that all countries around the world are going to align on CO2 reductions. The economic forces and the headwinds against that are too strong.

Isn't there another way for us to think through the outcomes of that? What we do know to be true is that extreme weather events are going to increase, not decrease. We have these external variables that we know, that we have data for, and that we can chart—we don't know the outcomes, but we know they exist—so that tells us that we have a global food supply problem somewhere on the horizon. That should be an incentive to do long-horizon planning to bring different approaches.

Like bring a lot of farming indoors. When you talk to farmers, they say: "Don't tell us how to do our jobs. That's not possible." I know that it is. I also come from a part of my family who were commercial farmers, so I know. This is different, and we don't have strategic direction at a national level on how to think this through.

And then, worse, let's say that we do significantly innovate in this space. That is going to start to create new geopolitical problems. There are countries that rely on our exports, there are countries that rely on our imports, and if suddenly we shrink the global supply chain down so that agriculture and agricultural production looks really different, that could create a new security problem. And, by the way, that is one tiny, tiny segment of what our government should be discussing and thinking about but isn't.

All of these things are addressable now. If we had the political will to do long-term planning and to stick with that plan regardless of who is in office, then we would be rowing a boat in a positive direction all together to the future. But we're not doing that and there are other countries that are.

TATIANA SERAFIN: I think the China example—and you use it a couple of times in the book—is a really interesting one. We talk here at The Doorstep a great deal about China and its influence and implications in so many sectors here in the United States that people do not really realize.

In this particular instance it is not just that they will get ahead of us. It is also this idea—and you mention it in the book—and it is one of the extreme examples of how synthetic biology could potentially be used for what you call dual purposes; there is a good part and a bad part. That is one of the nine risks that you mention that got me thinking of the nine because there is great opportunity as we have discussed, but there are also these drawbacks, especially in the China example.

Can you talk our audience through that example that you have in the book?

AMY WEBB: There is dual-use and then there are the challenges presented by China, so let me separate the two of those.

With every new science and technology there is always the possibility that somebody is going to take that science or tech and use it for nefarious purposes. We call this dual-use, and it has been ever present.

The example that I give in the book has to do with the invention of chemicals that were later used as chemical weapons. To be fair, I could use a fork and stab you in the neck with a fork, and a fork would be a deadly weapon if I stab you with it. Most of the time that is not what's happening and we're eating with forks.

That is a glib example, but it does highlight the challenge here, and that is to acknowledge risk that is plausible without sensationalizing it, and sometimes, especially now with the way our communication happens virtually and digitally, that is harder and harder to do.

In this case, if we are talking about editing organisms, of course there is the potential for dual-use, either intentionally or unintentionally. If you live anywhere on the East Coast, you have probably seen in the summertime—and this is starting to happen now—trees covered by a horrific invasive vine. That vine is called kudzu, and kudzu was introduced into the United States just after the Dust Bowl. The reason for this was it was meant to be a solution; it was meant to re-wild and re-green areas that were devastated after horrific droughts. Well, nobody really thought through the next-order impacts, and the next-order impacts are now abundant. There is so much of that vine growing in so many places that it has choked off the vegetation that was originally there. That is an example of an unintended, unforeseen use case. That is dual-use.

When it comes to biology, biology tends to self-sustain. At the moment it is not really possible for us to engineer an "off switch" at scale. That is something for us to consider. Now, is it likely or even plausible that somebody might engineer intentionally the next kudzu that infiltrates other parts of the country? Probably not, but we don't want to rule that out.

There is intentional dual-use, where somebody intentionally takes a technology and weaponizes it. One of the arguments that we are making in the book is that that is very possible, and it is possible that that happens at a state level.

We know that in China—we have lots of evidence in the book describing all of this—we know that there is research happening that probably would not pass muster in other parts of the world.

We know that something called "gain-of-function" research is being practiced in various places around the world. In 2011 one of the stories we tell is a researcher in Rotterdam announced that he had augmented the H5N1 bird flu virus so that it could be transmitted from birds to humans and then between people as a new airborne deadly strain of the flu. You would ask yourself literally: Why would anybody do that? The answer was because he was trying to, in his words, "mutate the hell out of the virus to see how bad it could get" in the name of predicting mutations and developing cures. Pre-COVID-19 H5N1 was the worst virus to hit our planet for humans since the Spanish flu, and it had a super-high mortality rate, but it was nothing like what we are currently dealing with.

There is no reason today to do gain-of-function research. It's a stupid thing to pursue. We have predictive models. DeepMind, which is part of Google, has figured out every way the protein folds. There is no reason to do this type of research other than for use as a bioweapon.

One of the concerns I certainly have—we make the case in the book about this—is that we don't want to be in a situation where there is a new form of bio-escalation, and the perception of one country enhancing anything for competitive purposes or to make its security stronger, whatever it might be, is really dangerous. To me that is much more concerning. I was a 1980s kid, so I remember the Cold War very well and I remember being terrified of nuclear war. As scared as I was about that, this is a completely different beast, much more concerning, but also avoidable.

NIKOLAS GVOSDEV: You talk again about how other states have these capabilities and may be moving in different directions. To what extent do these technologies in synthetic biology begin to move out of the hands of large organizations? At what point do they begin to drop to the level of smaller groups of people or smaller businesses—but also, as we saw in 1995 with Aum Shinrikyo in Japan, of those who say, "We want to engineer an apocalypse"—to what extent do you see the risks that this technology, as with AI and other things, can scale downward to where more and more people can have access to it?

AMY WEBB: I used to live in Japan, and I actually moved there about 18 months after that horrific sarin gas attack, and people were still reeling from it all that time later. That was a case where they didn't engineer something new, they just acquired something that existed. Again, anybody can acquire tools that are disruptive.

The question you are getting at I think is: How easily accessible is this technology to individuals, to rogue actors, to people who want to inflict real damage on others?

There is still quite a bit of a learning curve here, and there still are lots of processes and procedures that have to be followed. You can't just print anything you want, you still have to get reagents, you have to get materials; so the probability that somebody engineers something bad is orders of magnitude lower than somebody acquiring an existing dangerous agent and releasing that in a way that is harmful.

I am actually not super-concerned at the moment about individuals or small groups making use of these technologies. I am actually much more concerned that we are going to drag our heels on this stuff for so long that when some breakthroughs happen lawmakers are going to wake up from their slumber and decide at that point to regulate. In the United States I just think that would be catastrophic.

We are facing existential threats. The argument over who caused what doesn't at this point matter to me. We have a climate change problem—like there is no other way; it is—so let's acknowledge that and put the considerable might, will, and finances of the United States into solving that problem using alternative solutions, which we are not currently doing.

My concern has to do with missed opportunities that wind up becoming policy problems, legal arguments, and craziness. That concerns me. The fact that we have intellectual property (IP) problems. There is a chapter in the book about something called "golden rice," which I am sure we will talk about at some point. The end of that story is that this was a product that was intended to be given away, the patents were intended to be given away—much like the original patent for insulin was intended to be made public so that anybody could manufacture it and help people—but when it came to this rice product, the IP on this became so insane that there was no way for them to release the code and the process for free because it would have infringed on like 70 patents.

Likewise in the past few months the U.S. Patent and Trademark Office reversed its decision on who owned patents for CRISPR, whether it was Berkeley, a public institution, or the Broad Institute, a private institution.

This continual back and forth and this murkiness that is in some ways unique to the United States concerns me. That concerns me, and then obviously misinformation concerns me. Honestly I am much more concerned about that at this point than I am about some guy getting his hands on synthetic biology equipment and materials and trying to engineer something.

TATIANA SERAFIN: Let's stick with the patent issue because it's the patents here and globally. A very effective argument you make in the book is that we need to create a global discussion, a global group—you call it a "Bretton Woods" for this area—where we have a consensus about how this is going to be managed, from who is going to manage it to patent and structure. Talk to us a little bit more about that.

AMY WEBB: Again, we have a challenge when it comes to IP and regulation specifically because countries are misaligned on what both are. In the United States we tend to regulate products versus process, and there is good reason for that: The government wants to encourage growth and innovation, and nobody wants to regulate how scientists are doing their work in the lab. All of that makes sense.

However, we are in the middle of using and creating new processes that have never existed before, so turning a blind eye to that until there is a big inflection point is an invitation to challenging people's expectations, and it invites problems down the road.

It is much better for policymakers to be thinking through how our regulatory framework is going to have to change. We have something called the Coordinated Framework for Regulation of Biotechnology, which is like three different agencies' regulations kind of kluged together, and that is what we're doing. It is the year 2022. That's how we have chosen to operate this.

As murky as things are in the United States, they are equally murky all around the world, and there is not a lot of alignment. There are global discussions starting to happen. The World Economic Forum has a Council on Synthetic Biology and they are trying to think through what that looks like. Eric Schmidt through his foundation Schmidt Futures—Eric Schmidt was the former CEO of Google and then Alphabet—this is something that they are trying to galvanize a global conversation on.

But global conversations have to lead to actions, and we don't have a framework for this. There is the Biological Weapons Convention. What we don't have is a framework that encourages innovation and enables people to profit while also setting a strategic roadmap as well as guardrails and guidelines that are enforceable. We don't have that. We are not incenting collaboration.

The model that we propose is based on Bretton Woods. My academic background is economics and game theory, so that is to some degree where some of this is coming from. I really spent a long time trying to find an analogous model of some way to bring people to the table, to incent governments to make good decisions, and that was the best model that I could find.

The idea here is if the countries that are members of this are all incentivized to align and collaborate and the penalties are great for those who deviate, then I think we have something and we have a way to work and to move forward.

We actually have lots of recommendations that range from how there should be a global consortium that is accountable and working toward decisions in a quicker way than typically happens, all the way down to individual levels.

We talk about George Church, who is a preeminent geneticist who said: "We have to have a license to drive a vehicle. Maybe we should have to have a license to operate synthetic biology." We agree. We would take that many steps further, and we explain how in the book. We think the right approach is to enable people to do their research and to really innovate while keeping a watchful eye on what they are doing and how, to make sure we have good, effective safety standards.

TATIANA SERAFIN: We have addressed climate and food supply. I think one of the other areas—and something that really resonates with me—is the positive potential for health.

As a cancer survivor, I really loved the description of creating a specific cure or solution for your particular cancer because everybody's cancer is a little bit different. As opposed to going in, getting your chemo, and then the doctors adjusting it, how about we do a screen beforehand and then give you the appropriate diagnosis and solution? That to me was a really tangible example—again bringing it to the doorstep—of how synthetic biology can help improve our lives.

Are there other examples for our audience to bring it home into this sector that I think is also super-important, this health and wellness sector, that you can talk about?

AMY WEBB: This book is deeply personal. It opens with a very intimate look into my life and into Andrew's life. We bring up health issues throughout.

I am not spoiling anything by explaining this, but the book opens with Andrew and I separately trying to get pregnant. In my case I was told repeatedly that there was nothing wrong with me. I was pregnant nine times, and I have had one child. All eight of those other pregnancies resulted in miscarriages that ranged from after three, four, or five weeks, to a miscarriage much later in the process, to a pretty horrific miscarriage in my second trimester that resulted in surgery and was just awful.

Clearly there was something wrong. I am very healthy. I was not drinking, I was not smoking, I was following guidelines, I was doing everything that I was supposed to do. Something wasn't right. The promise of gaining these keys, gaining "write" access to life, is that somebody like me would potentially have answers and also options, different ways to create the family that I was trying to create.

There are other places throughout the book where we both talk about health. My mother had a very rare form of cancer, neuroendocrine cancer, and she saw specialists at Mayo and she went down to MD Anderson in Texas. We have come very far, but there is so much that we don't really understand about our own biology. In her case she was on a cocktail of chemotherapies; there wasn't one designed specifically for her.

In that case, we are not there yet, but there is a lot of research being done in things like creating a virus—viruses get a bad rap, and a lot of them are very bad—but another way to think about a virus is a USB stick or a thumb drive, that little thing that you plug into your computer to transfer files. A virus is just a container for code.

It's plausible that ten years from now for somebody with the same type of cancer that my mom had we would create a virus that would deliver different instructions and stop the tumor or the cancerous cells from growing or cause them not to exist.

There is work being done on longevity and on repairing cells in a way that allows us to get old without aging, if that makes any sense. These are just very different approaches. Again, this is the kind of thing that challenges our mental models, but the options on the other side of this are profound.

There is a lot in the book about what all of this potentially means for the future of how we live, how long we live, and how we mitigate and manage the biological issues that challenge us.

TATIANA SERAFIN: I have to go into one of your scenarios. The book is broken up into history, risks, and scenarios. Since we are talking about aging, the scenario you have in there about aging really struck me. Your point in this entire conversation is that we need to start thinking through this now because it sounds great—I am going to have all this collagen, I'm going to look fabulous, and I'm going to live until 200—but the implications of that are so profound for the economy, for politics, for everything.

Without giving too much away, could you tell us how you created these scenarios? That was one that totally struck me as something that would resonate with our audience.

AMY WEBB: Let me preface this by saying there are different types of scenarios. The scenarios that I would normally prepare with my team for a client are strategic, which are seeking to answer questions about plausible alternative futures, and there are normative scenarios and there are exploratory scenarios. The point is those are intended to be exploratory but pragmatic, rooted in data.

There is another type of scenario, and that is a scenario that is more speculative, which seeks to force you into a state of reperception. Reperception is seeing the white spaces around you, seeing what currently exists in a new light. This is effective because again we tend to be pretty biased and we tend to continue on a linear path oftentimes. This active reperception helps you imagine alternative futures, and then you can choose what to do.

The scenarios in the book are all very researched, they are all backed by data, but they are speculative. They are meant to sound like science fiction, although they are very much not fiction. I mean, they're fiction, but they're not like science fiction. There are five of them, and each of them explores a different cluster of topics.

I spend half of my week in New York, and I am a longtime reader of New York magazine. Those of you who are readers or foodies probably know Adam Platt's Where to Eat guide. I was trying to imagine in this world where we can engineer tissue, meat, wine, or beer, what might a future Where to Eat guide look like?

We fast-forwarded several years and wrote a guide. The guide has things like the best new biofoundries, who has the best bioreactors, and it is written just as you would read a restaurant review or a where-to-eat guide in your local newspaper, magazine, that sort of thing. The reason it is effective is because it's familiar but it forces us into a different state of mind, a different perspective.

There is another one on aging and what happens when this technology gets applied to allow us technically to live longer lives. Would that be evenly distributed? What happens to sports in a world in which people can live ultra-long lives? What does this mean about political appointments that are for lifetime terms?

What does this mean for succession plans? I will admit that at the time I was writing that scenario I was also watching the show Succession, so that was to some degree on my mind. If you are part of a family dynasty and the elder members of that dynasty don't want to retire but also aren't dying, what does that mean for maybe five generations of adults who are all now alive and want to be a part of the decision making?

Those scenarios are intended to help you explore next-order impacts. They read like fiction. I think the people who skimmed the introduction to that set of chapters, that part of the book where we explain why we are doing this and the difference between a speculative scenario and a strategic scenario, got a little confused. We have seen a little bit of criticism here and there, people saying, "Why are you writing science fiction?" But these scenarios are really effective tools to help you calibrate how you are thinking about the future.

TATIANA SERAFIN: I thought it was super-effective. It really made me think of how society needs to change and the things that we need to do now. Again and again you say in the book that we have to think about it now on the state and federal levels but also at a local level.

You give an example of how we need to educate ourselves, so let's go to the misinformation, information, and how we educate ourselves situation. There is a situation where a rule came down and a county board or a local board had to make a decision on how to apply something, but they were not scientists and they didn't have the tools to make the decision. Maybe can we talk about how we arm ourselves with more and better information about synthetic biology and about the future?

AMY WEBB: This is really challenging. I was sort of sitting back watching people's reactions to coronavirus when it was first happening. It is a very natural response to be fearful. For people who were around at the beginning of the HIV/AIDS crisis there are some parallels that I was observing as we were learning about this new virus.

I think science continually becomes politicized, which is unfortunate. One of the data points we offer in the book was from a Pew study that was done in the middle or beginning of COVID-19. It's fascinating. There was a survey done on "Who do you trust the most?" "Scientists" were right up there with "my spiritual, religious, theological leader" and "teachers." When that same group of people was asked whether or not they trust science, those numbers fell pretty sharply. So there is this delta between our trust in scientists and our trust in the work those people are doing, meaning the science. That is a contradiction I think worth noting because it helps us understand a little bit the situation that we're in right now.

The book launched in February. Just after it launched I was doing a big media tour, and I agreed to be on this show Coast to Coast AM. Some of you may listen to that show. If you are not familiar with it, it is a show that airs coast to coast in the middle of the night. I think I was on at 3:00am or 2:00am or something like that, my time.

I got a variety of phone calls that night. I am really grateful that I had that opportunity. I am really glad that I did it because I think we are not listening to people, especially when it comes to scary things like new viruses. When it comes to COVID-19 there was a lot of yelling at people or chastising people, but there wasn't a lot of listening. Again, I am talking not like one-on-one—I think a lot of people were doing a great job listening to each other—but I am talking more about on a global level, especially as it relates to media, I don't think there was enough listening and empathizing.

That night towards the tail end—I was getting a little delirious because it's the middle of the night and I'm tired—somebody was concerned that they thought they could get COVID-19 from gardening, from their soil; she was genuinely concerned, and I felt it.

Rather than shooting her down or telling her something different, I said: "Here's how I understand things," and why she didn't want to get the vaccine. I said, "Do you play video games?" She played video games. I said: "Cool."

I love this game called Zelda. I love most of the iterations of Zelda. My family also likes to play Zelda. I am now super-busy at work so I don't get to play. I am not a time millionaire anymore, I don't have endless hours to play Zelda, and my family members log onto my account and play as me, which I find incredibly annoying. When I go back to sit down I am in some new realm; I have tools, but I have no idea what they are or how to use them, and I am confronted with things on my path that may be benevolent or they may be monstrous—I don't know.

At one point, there is this blob in front of me, a gelatinous-looking transparent blob. The music? Not quite sure; maybe it's bad, maybe it's okay. The problem is I don't know what to do with the blob. I don't know if I should cook the blob and eat it or I don't know if I should kill the blob. I don't know what that blob is because I don't recognize it.

Later, when I see my family they give me the instructions: "Oh, by the way, that blob is totally dangerous. It looks benign, but it will absolutely kill you. You have to use this tool in your tool belt, you have to go 1, 2, 3, and that's how you kill it." Once I had the instructions I'm good to go. I have all this stuff. I just don't know what to do with it.

That's what the vaccine does. The vaccine is the same instructions that my totally uncool family members who went ahead in the game gave to me. The stuff was already inside me, I already had the tool in my quiver, but what I lacked was the information. The vaccine is just the information you need to recognize the blob and kill it the right way. That's all it does.

I am just relaying this because after I explained it to her I could hear in her voice that she felt relieved. I think she might have actually gone and gotten a vaccine after that. It totally changed her perspective. I never used a science word, and it wasn't because I didn't think she would understand it. I am sure she would have understood it, but it wasn't the right thing to say in that moment.

This is my concern with the synthetic biology field. First of all, nobody knows what the words "synthetic biology" mean. That's like industry branding, and I don't think it's great. That already doesn't make sense. Also it reminds me of Frankenstein, The Island of Dr. Moreau, or Jurassic Park. The whole thing sounds bad to me.

We are going into this with some problems on the heels of a horrific misinformation catastrophe as it relates to COVID-19 and vaccines. Again, now is the time to do planning so that we have a strategy to manage the flow of information and how we are going to have these conversations.

I keep talking about Dolly the sheep, but a lot of people don't remember Dolly the sheep.

TATIANA SERAFIN: I remember Dolly the sheep.

AMY WEBB: President Clinton had to have a press conference and the pope had to have a press conference to calm people down. Collectively we lost our shit as a planet when that announcement was made in the 1990s. All these years later we don't have demon spawn running around outside trying to kill us. We have new therapeutics, medicines, materials, and a whole bunch of other stuff that never would have actually happened otherwise.

We need to learn our lessons from Dolly, we need to learn our lessons from the early days of the HIV/AIDS epidemic, and we need to learn our lessons from how we managed the flow of information with COVID-19 because the stuff that is on the horizon is going to challenge people's thinking in significant and profound ways, but we can't wait because—again that is the reason that we wrote the book—now is the time to think.

We want to have public debates. We want people to have a dialogue. We want people to challenge what we have written. As long as you have data, it's fine. But now is the time to do that, not when the next big breakthrough happens or the next big announcement happens and everybody is under duress because of fear.

NIKOLAS GVOSDEV: I think there has been a great conversation in the Chat about the extent to which the COVID-19 crisis in the United States demonstrated a lot of these vulnerabilities—the lack of foresight, misinformation, the way in which "I believe the science" but really it also became "as long as I agree with the science." Science of course is always about testing hypotheses and coming up with new data, and yet people were clinging to a science, say, of March of 2021 or September of 2021 when it reflected policy things.

Some questions have come in. Elsie Maio asks: "Are there multinational or national standards for foresight that you find encouraging that we can build on?"

Bojan Francuz talks about Policy Horizons Canada. Is that a way forward?

Michelle Mattei talked about what the European Union has been doing. Do you see elements there? In particular, we have had some political figures—I'm thinking particularly of Nils Schmid in Germany—talking about the United States, Europe, East Asia, and India coming together to form a technology coalition moving forward. Do you see some positive sides where this could go forward?

I also want to throw in another question: When we talk about these international coalitions—because several people have raised this—are we really talking about the wealthier countries? Where do the poor countries fit in the grand scheme of things?

AMY WEBB: There were four points in that. Let me answer the last point first because that's the easiest.

Yes, obviously we are only ever talking about wealthy countries. The emerging markets, the developing economies are always last. Yes, it would be wonderful and useful if in work that is related to foresight or to the future of technology—the future of SynBio, biotech, whatever it is—we bring these other stakeholders to the table and recognize that they are part of the planet too. I think that would be wonderful.

On the second issue having to do with global coalitions, there are lots of examples especially when it comes to AI. I proposed what I call GAIA, the Global Alliance on Intelligence Augmentation, in my last book, which was The Big Nine: How the Tech Titans and Their Thinking Machines Could Warp Humanity, with a very robust description of what that would look like and how it should function. Since that book was published there have been variations of that proposed, and I think in the European Union there is some form of that, and I did a little bit of advising there for a few minutes. I think some version of that is moving forward. I think also the United States has launched some version of this. I don't know where it is yet. Honestly I haven't followed the development on that.

The bottom line is that everybody has a framework, a set of recommendations, and an ethical guideline on AI now. I mean everybody. The problem is that none of these things are really enforceable. It is about getting the right power brokers together. It's like everything: You have to get the right people into the right room at the right time, incent them to act, and create some type of framework that creates unattractive circumstances if they deviate. So that's that. But it is really hard to get alignment on these things, especially when you have a global hegemon like China that is pushing in its own direction and through the Belt and Road Initiative collecting signatories and others along the way.

Somewhere in the middle of that there was a question about national foresight offices. Yes, Canada has Policy Horizons Canada. There are the Nordics. There is a lot of foresight activity, especially in Finland. Foresight is part of governmental activities in Asia. So there is actually a lot. There is also a federal foresight community in the United States that various people belong to.

From my vantage point what is lacking in the United States is not an enthusiastic base for foresight within the federal government, but some type of coordinating office that is cabinet-level perhaps or something equivalent, that has real staying power, some type of enforcement mechanism, and has the political capital and attractiveness that, regardless of which party is in office at that moment, the "cool kids" are in that office and there is a net benefit to helping that work continue. From my point of view that is the missed opportunity. That's what is missing.

TATIANA SERAFIN: I think that there is so much more that we could talk about. This is a really excellent book.

Thank you so much for joining us. The Genesis Machine: Our Quest to Rewrite Life in the Age of Synthetic Biology. Some great recommendations and the start of a discussion that I think we will be talking about for years to come. Let's open the discussion now, today. Get the book, read it. It's wonderful. It's such a great read.

AMY WEBB: Thank you very much.

TATIANA SERAFIN: Thank you for joining us today, and we hope to have you back when these offices exist.

AMY WEBB: Thank you so much for having me on the show, and to everybody for being here with us.

NIKOLAS GVOSDEV: Thank you, everyone, for joining us tonight.

As Tatiana said, please continue the conversation @DoorstepPodcast on Twitter. No reason for it to stop this evening, although we will have to bring this part of the program to a close.

With that, thank you all for attending, and we wish everyone a good evening.